配置清单

使用声明式的配置文件描述你需要的基础设施与集群

Pigsty 遵循 IaC 与 GitOPS 的理念:使用声明式的 配置清单 描述整个环境,并通过 幂等剧本 来实现。

用户用声明的方式通过 参数 来描述自己期望的状态,而剧本则以幂等的方式调整目标节点以达到这个状态。 这类似于 Kubernetes 的 CRD & Operator,然而 Pigsty 在裸机和虚拟机上,通过 Ansible 实现了这样的功能。

Pigsty 诞生之初是为了解决超大规模 PostgreSQL 集群的运维管理问题,背后的想法很简单 —— 我们需要有在十分钟内在就绪的服务器上复刻整套基础设施(100+数据库集群 + PG/Redis + 可观测性)的能力。 任何 GUI + ClickOps 都无法在如此短的时间内完成如此复杂的任务,这让 CLI + IaC 成为唯一的选择 —— 它提供了精确,高效的控制能力。

配置清单 pigsty.yml 文件描述了整个部署的状态,无论是 生产环境(prod),预发环境(staging), 测试环境(test),还是 开发环境(devbox),

基础设施的区别仅在于配置清单的不同,而部署交付的逻辑则是完全相同的。

您可以使用 git 对这份部署的 “种子/基因” 进行版本控制与审计,而且,Pigsty 甚至支持将配置清单以数据库表的形式存储在 PostgreSQL CMDB 中, 更进一步从 Infra as Code 升级为 Infra as Data,无缝与您现有的工作流程集成与对接。

IaC 面向专业用户与企业场景而设计,但也针对个人开发者,SMB 进行了深度优化。 即使您并非专业 DBA,也无需了解这几百个调节开关与旋钮,所有参数都带有表现良好的默认值, 您完全可以在 零配置 的情况下,获得一个开箱即用的单机数据库节点; 简单地再添加两行 IP 地址,就能获得一套企业级的高可用的 PostgreSQL 集群。

以下面的默认配置片段为例,这段配置描述了一个节点 10.10.10.10,其上安装了 INFRA、NODE、ETCD 和 PGSQL 模块。

# 监控、告警、DNS、NTP 等基础设施集群...

infra: { hosts: { 10.10.10.10: { infra_seq: 1 } } }

# minio 集群,兼容 s3 的对象存储

minio: { hosts: { 10.10.10.10: { minio_seq: 1 } }, vars: { minio_cluster: minio } }

# etcd 集群,用作 PostgreSQL 高可用所需的 DCS

etcd: { hosts: { 10.10.10.10: { etcd_seq: 1 } }, vars: { etcd_cluster: etcd } }

# PGSQL 示例集群: pg-meta

pg-meta: { hosts: { 10.10.10.10: { pg_seq: 1, pg_role: primary }, vars: { pg_cluster: pg-meta } }

要真正安装这些模块,执行以下剧本:

./infra.yml -l 10.10.10.10 # 在节点 10.10.10.10 上初始化 infra 模块

./etcd.yml -l 10.10.10.10 # 在节点 10.10.10.10 上初始化 etcd 模块

./minio.yml -l 10.10.10.10 # 在节点 10.10.10.10 上初始化 minio 模块

./pgsql.yml -l 10.10.10.10 # 在节点 10.10.10.10 上初始化 pgsql 模块

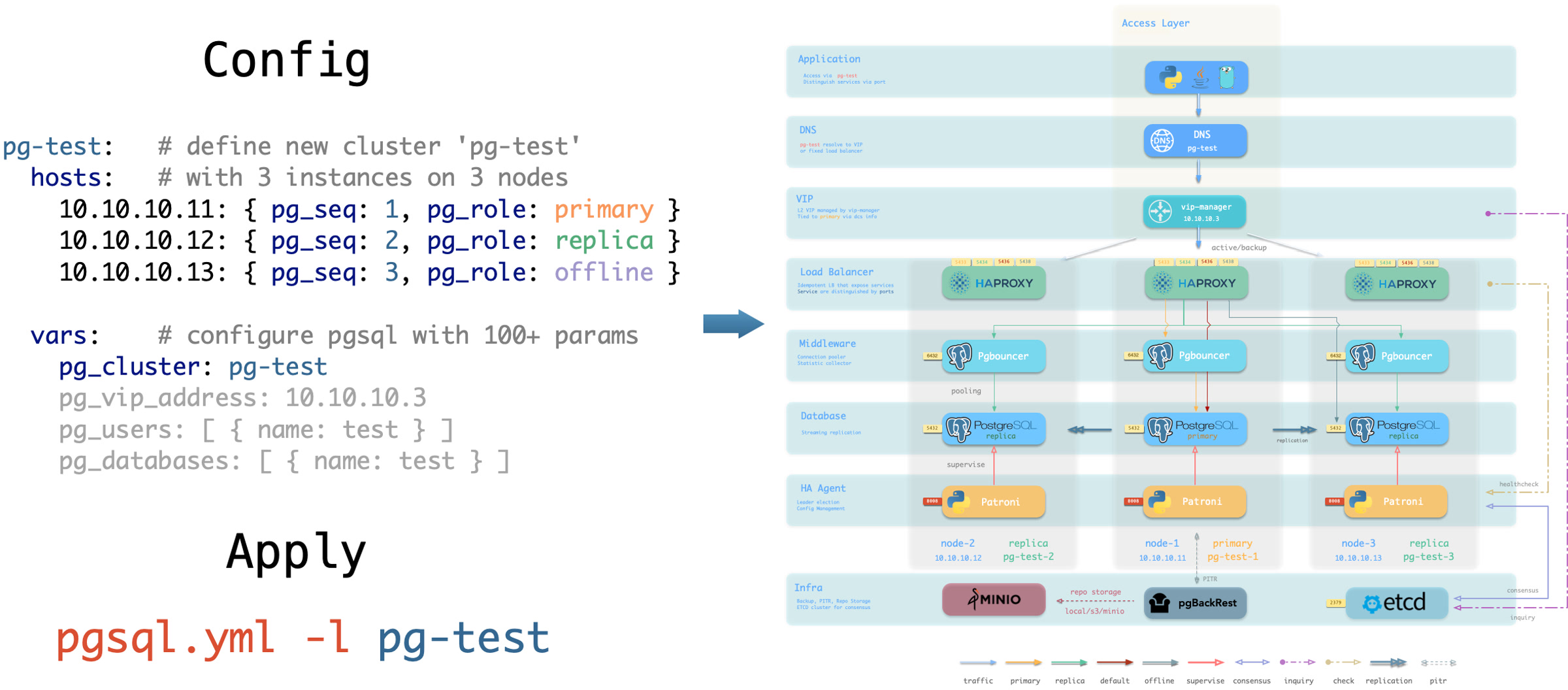

您可以声明 PostgreSQL 数据库集群,在多个节点上安装 PGSQL 模块,并使其成为一个服务单元:

例如,要在以下三个已被 Pigsty 纳管的节点上,部署一个使用流复制组建的三节点高可用 PostgreSQL 集群,

您可以在配置文件 pigsty.yml 的 all.children 中添加以下定义:

pg-test:

hosts:

10.10.10.11: { pg_seq: 1, pg_role: primary }

10.10.10.12: { pg_seq: 2, pg_role: replica }

10.10.10.13: { pg_seq: 3, pg_role: offline }

vars: { pg_cluster: pg-test }

定义完后,可以使用 剧本 将集群创建:

bin/pgsql-add pg-test # 创建 pg-test 集群

你可以使用不同的实例角色,例如 主库(primary),从库(replica),离线从库(offline),延迟从库(delayed),同步备库(sync standby); 以及不同的集群:例如 备份集群(Standby Cluster),Citus 集群,甚至是 Redis / MinIO / Etcd 集群

您不仅可以使用声明式的方式定义集群,还可以定义集群中的数据库、用户、服务、HBA 规则等内容,例如,下面的配置文件对默认的 pg-meta 单节点数据库集群的内容进行了深度定制:

包括:声明了六个业务数据库与七个业务用户,添加了一个额外的 standby 服务(同步备库,提供无复制延迟的读取能力),定义了一些额外的 pg_hba 规则,一个指向集群主库的 L2 VIP 地址,与自定义的备份策略。

pg-meta:

hosts: { 10.10.10.10: { pg_seq: 1, pg_role: primary , pg_offline_query: true } }

vars:

pg_cluster: pg-meta

pg_databases: # define business databases on this cluster, array of database definition

- name: meta # REQUIRED, `name` is the only mandatory field of a database definition

baseline: cmdb.sql # optional, database sql baseline path, (relative path among ansible search path, e.g files/)

pgbouncer: true # optional, add this database to pgbouncer database list? true by default

schemas: [pigsty] # optional, additional schemas to be created, array of schema names

extensions: # optional, additional extensions to be installed: array of `{name[,schema]}`

- { name: postgis , schema: public }

- { name: timescaledb }

comment: pigsty meta database # optional, comment string for this database

owner: postgres # optional, database owner, postgres by default

template: template1 # optional, which template to use, template1 by default

encoding: UTF8 # optional, database encoding, UTF8 by default. (MUST same as template database)

locale: C # optional, database locale, C by default. (MUST same as template database)

lc_collate: C # optional, database collate, C by default. (MUST same as template database)

lc_ctype: C # optional, database ctype, C by default. (MUST same as template database)

tablespace: pg_default # optional, default tablespace, 'pg_default' by default.

allowconn: true # optional, allow connection, true by default. false will disable connect at all

revokeconn: false # optional, revoke public connection privilege. false by default. (leave connect with grant option to owner)

register_datasource: true # optional, register this database to grafana datasources? true by default

connlimit: -1 # optional, database connection limit, default -1 disable limit

pool_auth_user: dbuser_meta # optional, all connection to this pgbouncer database will be authenticated by this user

pool_mode: transaction # optional, pgbouncer pool mode at database level, default transaction

pool_size: 64 # optional, pgbouncer pool size at database level, default 64

pool_reserve: 32 # optional, pgbouncer pool size reserve at database level, default 32

pool_size_min: 0 # optional, pgbouncer pool size min at database level, default 0

pool_connlimit: 100 # optional, max database connections at database level, default 100

- { name: grafana ,owner: dbuser_grafana ,revokeconn: true ,comment: grafana primary database }

- { name: bytebase ,owner: dbuser_bytebase ,revokeconn: true ,comment: bytebase primary database }

- { name: kong ,owner: dbuser_kong ,revokeconn: true ,comment: kong the api gateway database }

- { name: gitea ,owner: dbuser_gitea ,revokeconn: true ,comment: gitea meta database }

- { name: wiki ,owner: dbuser_wiki ,revokeconn: true ,comment: wiki meta database }

pg_users: # define business users/roles on this cluster, array of user definition

- name: dbuser_meta # REQUIRED, `name` is the only mandatory field of a user definition

password: DBUser.Meta # optional, password, can be a scram-sha-256 hash string or plain text

login: true # optional, can log in, true by default (new biz ROLE should be false)

superuser: false # optional, is superuser? false by default

createdb: false # optional, can create database? false by default

createrole: false # optional, can create role? false by default

inherit: true # optional, can this role use inherited privileges? true by default

replication: false # optional, can this role do replication? false by default

bypassrls: false # optional, can this role bypass row level security? false by default

pgbouncer: true # optional, add this user to pgbouncer user-list? false by default (production user should be true explicitly)

connlimit: -1 # optional, user connection limit, default -1 disable limit

expire_in: 3650 # optional, now + n days when this role is expired (OVERWRITE expire_at)

expire_at: '2030-12-31' # optional, YYYY-MM-DD 'timestamp' when this role is expired (OVERWRITTEN by expire_in)

comment: pigsty admin user # optional, comment string for this user/role

roles: [dbrole_admin] # optional, belonged roles. default roles are: dbrole_{admin,readonly,readwrite,offline}

parameters: {} # optional, role level parameters with `ALTER ROLE SET`

pool_mode: transaction # optional, pgbouncer pool mode at user level, transaction by default

pool_connlimit: -1 # optional, max database connections at user level, default -1 disable limit

- {name: dbuser_view ,password: DBUser.Viewer ,pgbouncer: true ,roles: [dbrole_readonly], comment: read-only viewer for meta database}

- {name: dbuser_grafana ,password: DBUser.Grafana ,pgbouncer: true ,roles: [dbrole_admin] ,comment: admin user for grafana database }

- {name: dbuser_bytebase ,password: DBUser.Bytebase ,pgbouncer: true ,roles: [dbrole_admin] ,comment: admin user for bytebase database }

- {name: dbuser_kong ,password: DBUser.Kong ,pgbouncer: true ,roles: [dbrole_admin] ,comment: admin user for kong api gateway }

- {name: dbuser_gitea ,password: DBUser.Gitea ,pgbouncer: true ,roles: [dbrole_admin] ,comment: admin user for gitea service }

- {name: dbuser_wiki ,password: DBUser.Wiki ,pgbouncer: true ,roles: [dbrole_admin] ,comment: admin user for wiki.js service }

pg_services: # extra services in addition to pg_default_services, array of service definition

# standby service will route {ip|name}:5435 to sync replica's pgbouncer (5435->6432 standby)

- name: standby # required, service name, the actual svc name will be prefixed with `pg_cluster`, e.g: pg-meta-standby

port: 5435 # required, service exposed port (work as kubernetes service node port mode)

ip: "*" # optional, service bind ip address, `*` for all ip by default

selector: "[]" # required, service member selector, use JMESPath to filter inventory

dest: default # optional, destination port, default|postgres|pgbouncer|<port_number>, 'default' by default

check: /sync # optional, health check url path, / by default

backup: "[? pg_role == `primary`]" # backup server selector

maxconn: 3000 # optional, max allowed front-end connection

balance: roundrobin # optional, haproxy load balance algorithm (roundrobin by default, other: leastconn)

options: 'inter 3s fastinter 1s downinter 5s rise 3 fall 3 on-marked-down shutdown-sessions slowstart 30s maxconn 3000 maxqueue 128 weight 100'

pg_hba_rules:

- {user: dbuser_view , db: all ,addr: infra ,auth: pwd ,title: 'allow grafana dashboard access cmdb from infra nodes'}

pg_vip_enabled: true

pg_vip_address: 10.10.10.2/24

pg_vip_interface: eth1

node_crontab: # make a full backup 1 am everyday

- '00 01 * * * postgres /pg/bin/pg-backup full'

您还可以通过声明式的配置,深度定制 Pigsty 的访问控制能力。例如下面的配置文件对 pg-meta 集群进行了深度安全定制:

使用三节点核心集群模板:crit.yml,确保数据一致性有限,故障切换数据零丢失。

启用了 L2 VIP,并将数据库与连接池的监听地址限制在了本地环回 IP + 内网 IP + VIP 三个特定地址。

模板强制启用了 Patroni 的 SSL API,与 Pgbouncer 的 SSL,并在 HBA 规则中强制要求使用 SSL 访问数据库集群。

同时还在 pg_libs 中启用了 $libdir/passwordcheck 扩展,来强制执行密码强度安全策略。

最后,还单独声明了一个 pg-meta-delay 集群,作为 pg-meta 在一个小时前的延迟镜像从库,用于紧急数据误删恢复。

pg-meta: # 3 instance postgres cluster `pg-meta`

hosts:

10.10.10.10: { pg_seq: 1, pg_role: primary }

10.10.10.11: { pg_seq: 2, pg_role: replica }

10.10.10.12: { pg_seq: 3, pg_role: replica , pg_offline_query: true }

vars:

pg_cluster: pg-meta

pg_conf: crit.yml

pg_users:

- { name: dbuser_meta , password: DBUser.Meta , pgbouncer: true , roles: [ dbrole_admin ] , comment: pigsty admin user }

- { name: dbuser_view , password: DBUser.Viewer , pgbouncer: true , roles: [ dbrole_readonly ] , comment: read-only viewer for meta database }

pg_databases:

- {name: meta ,baseline: cmdb.sql ,comment: pigsty meta database ,schemas: [pigsty] ,extensions: [{name: postgis, schema: public}, {name: timescaledb}]}

pg_default_service_dest: postgres

pg_services:

- { name: standby ,src_ip: "*" ,port: 5435 , dest: default ,selector: "[]" , backup: "[? pg_role == `primary`]" }

pg_vip_enabled: true

pg_vip_address: 10.10.10.2/24

pg_vip_interface: eth1

pg_listen: '${ip},${vip},${lo}'

patroni_ssl_enabled: true

pgbouncer_sslmode: require

pgbackrest_method: minio

pg_libs: 'timescaledb, $libdir/passwordcheck, pg_stat_statements, auto_explain' # add passwordcheck extension to enforce strong password

pg_default_roles: # default roles and users in postgres cluster

- { name: dbrole_readonly ,login: false ,comment: role for global read-only access }

- { name: dbrole_offline ,login: false ,comment: role for restricted read-only access }

- { name: dbrole_readwrite ,login: false ,roles: [dbrole_readonly] ,comment: role for global read-write access }

- { name: dbrole_admin ,login: false ,roles: [pg_monitor, dbrole_readwrite] ,comment: role for object creation }

- { name: postgres ,superuser: true ,expire_in: 7300 ,comment: system superuser }

- { name: replicator ,replication: true ,expire_in: 7300 ,roles: [pg_monitor, dbrole_readonly] ,comment: system replicator }

- { name: dbuser_dba ,superuser: true ,expire_in: 7300 ,roles: [dbrole_admin] ,pgbouncer: true ,pool_mode: session, pool_connlimit: 16 , comment: pgsql admin user }

- { name: dbuser_monitor ,roles: [pg_monitor] ,expire_in: 7300 ,pgbouncer: true ,parameters: {log_min_duration_statement: 1000 } ,pool_mode: session ,pool_connlimit: 8 ,comment: pgsql monitor user }

pg_default_hba_rules: # postgres host-based auth rules by default

- {user: '${dbsu}' ,db: all ,addr: local ,auth: ident ,title: 'dbsu access via local os user ident' }

- {user: '${dbsu}' ,db: replication ,addr: local ,auth: ident ,title: 'dbsu replication from local os ident' }

- {user: '${repl}' ,db: replication ,addr: localhost ,auth: ssl ,title: 'replicator replication from localhost'}

- {user: '${repl}' ,db: replication ,addr: intra ,auth: ssl ,title: 'replicator replication from intranet' }

- {user: '${repl}' ,db: postgres ,addr: intra ,auth: ssl ,title: 'replicator postgres db from intranet' }

- {user: '${monitor}' ,db: all ,addr: localhost ,auth: pwd ,title: 'monitor from localhost with password' }

- {user: '${monitor}' ,db: all ,addr: infra ,auth: ssl ,title: 'monitor from infra host with password'}

- {user: '${admin}' ,db: all ,addr: infra ,auth: ssl ,title: 'admin @ infra nodes with pwd & ssl' }

- {user: '${admin}' ,db: all ,addr: world ,auth: cert ,title: 'admin @ everywhere with ssl & cert' }

- {user: '+dbrole_readonly',db: all ,addr: localhost ,auth: ssl ,title: 'pgbouncer read/write via local socket'}

- {user: '+dbrole_readonly',db: all ,addr: intra ,auth: ssl ,title: 'read/write biz user via password' }

- {user: '+dbrole_offline' ,db: all ,addr: intra ,auth: ssl ,title: 'allow etl offline tasks from intranet'}

pgb_default_hba_rules: # pgbouncer host-based authentication rules

- {user: '${dbsu}' ,db: pgbouncer ,addr: local ,auth: peer ,title: 'dbsu local admin access with os ident'}

- {user: 'all' ,db: all ,addr: localhost ,auth: pwd ,title: 'allow all user local access with pwd' }

- {user: '${monitor}' ,db: pgbouncer ,addr: intra ,auth: ssl ,title: 'monitor access via intranet with pwd' }

- {user: '${monitor}' ,db: all ,addr: world ,auth: deny ,title: 'reject all other monitor access addr' }

- {user: '${admin}' ,db: all ,addr: intra ,auth: ssl ,title: 'admin access via intranet with pwd' }

- {user: '${admin}' ,db: all ,addr: world ,auth: deny ,title: 'reject all other admin access addr' }

- {user: 'all' ,db: all ,addr: intra ,auth: ssl ,title: 'allow all user intra access with pwd' }

# OPTIONAL delayed cluster for pg-meta

pg-meta-delay: # delayed instance for pg-meta (1 hour ago)

hosts: { 10.10.10.13: { pg_seq: 1, pg_role: primary, pg_upstream: 10.10.10.10, pg_delay: 1h } }

vars: { pg_cluster: pg-meta-delay }

下面是一个四节点的 Citus 分布式集群的声明式配置:

all:

children:

pg-citus0: # citus coordinator, pg_group = 0

hosts: { 10.10.10.10: { pg_seq: 1, pg_role: primary } }

vars: { pg_cluster: pg-citus0 , pg_group: 0 }

pg-citus1: # citus data node 1

hosts: { 10.10.10.11: { pg_seq: 1, pg_role: primary } }

vars: { pg_cluster: pg-citus1 , pg_group: 1 }

pg-citus2: # citus data node 2

hosts: { 10.10.10.12: { pg_seq: 1, pg_role: primary } }

vars: { pg_cluster: pg-citus2 , pg_group: 2 }

pg-citus3: # citus data node 3, with an extra replica

hosts:

10.10.10.13: { pg_seq: 1, pg_role: primary }

10.10.10.14: { pg_seq: 2, pg_role: replica }

vars: { pg_cluster: pg-citus3 , pg_group: 3 }

vars: # global parameters for all citus clusters

pg_mode: citus # pgsql cluster mode: citus

pg_shard: pg-citus # citus shard name: pg-citus

patroni_citus_db: meta # citus distributed database name

pg_dbsu_password: DBUser.Postgres # all dbsu password access for citus cluster

pg_users: [ { name: dbuser_meta ,password: DBUser.Meta ,pgbouncer: true ,roles: [ dbrole_admin ] } ]

pg_databases: [ { name: meta ,extensions: [ { name: citus }, { name: postgis }, { name: timescaledb } ] } ]

pg_hba_rules:

- { user: 'all' ,db: all ,addr: 127.0.0.1/32 ,auth: ssl ,title: 'all user ssl access from localhost' }

- { user: 'all' ,db: all ,addr: intra ,auth: ssl ,title: 'all user ssl access from intranet' }

下面给出了 Redis 主从集群、哨兵集群、以及 Redis Cluster 的声明配置样例

redis-ms: # redis classic primary & replica

hosts: { 10.10.10.10: { redis_node: 1 , redis_instances: { 6379: { }, 6380: { replica_of: '10.10.10.10 6379' } } } }

vars: { redis_cluster: redis-ms ,redis_password: 'redis.ms' ,redis_max_memory: 64MB }

redis-meta: # redis sentinel x 3

hosts: { 10.10.10.11: { redis_node: 1 , redis_instances: { 26379: { } ,26380: { } ,26381: { } } } }

vars:

redis_cluster: redis-meta

redis_password: 'redis.meta'

redis_mode: sentinel

redis_max_memory: 16MB

redis_sentinel_monitor: # primary list for redis sentinel, use cls as name, primary ip:port

- { name: redis-ms, host: 10.10.10.10, port: 6379 ,password: redis.ms, quorum: 2 }

redis-test: # redis native cluster: 3m x 3s

hosts:

10.10.10.12: { redis_node: 1 ,redis_instances: { 6379: { } ,6380: { } ,6381: { } } }

10.10.10.13: { redis_node: 2 ,redis_instances: { 6379: { } ,6380: { } ,6381: { } } }

vars: { redis_cluster: redis-test ,redis_password: 'redis.test' ,redis_mode: cluster, redis_max_memory: 32MB }

下面给出了一个三节点的 Etcd 集群声明式配置样例:

etcd: # dcs service for postgres/patroni ha consensus

hosts: # 1 node for testing, 3 or 5 for production

10.10.10.10: { etcd_seq: 1 } # etcd_seq required

10.10.10.11: { etcd_seq: 2 } # assign from 1 ~ n

10.10.10.12: { etcd_seq: 3 } # odd number please

vars: # cluster level parameter override roles/etcd

etcd_cluster: etcd # mark etcd cluster name etcd

etcd_safeguard: false # safeguard against purging

etcd_clean: true # purge etcd during init process

下面给出了一个三节点的 MinIO 集群声明式配置样例:

minio:

hosts:

10.10.10.10: { minio_seq: 1 }

10.10.10.11: { minio_seq: 2 }

10.10.10.12: { minio_seq: 3 }

vars:

minio_cluster: minio

minio_data: '/data{1...2}' # 每个节点使用两块磁盘

minio_node: '${minio_cluster}-${minio_seq}.pigsty' # 节点名称的模式

haproxy_services:

- name: minio # [必选] 服务名称,需要唯一

port: 9002 # [必选] 服务端口,需要唯一

options:

- option httpchk

- option http-keep-alive

- http-check send meth OPTIONS uri /minio/health/live

- http-check expect status 200

servers:

- { name: minio-1 ,ip: 10.10.10.10 , port: 9000 , options: 'check-ssl ca-file /etc/pki/ca.crt check port 9000' }

- { name: minio-2 ,ip: 10.10.10.11 , port: 9000 , options: 'check-ssl ca-file /etc/pki/ca.crt check port 9000' }

- { name: minio-3 ,ip: 10.10.10.12 , port: 9000 , options: 'check-ssl ca-file /etc/pki/ca.crt check port 9000' }

使用声明式的配置文件描述你需要的基础设施与集群

使用 configure 脚本根据当前环境自动生成推荐的配置文件。

使用配置参数对 Pigsty 进行精细化定制

使用预制的配置模板,快速生成适配当前环境的配置文件

使用 PostgreSQL 作为 CMDB 元数据库,存储 Ansible 配置清单。